Testing with Apache and curl/wget to fetch files is the quick and dirty way to see if it is faster or not. The third option is a utility I have used that does a lot of what rsync does, but faster. If I can dig up the name I will post about it. When you're attempting anything that needs to sync millions of files across a high-latency link you're pretty screwed, especially when you're exploiting hard links.

Set up an Apache server to serve up the files. Why? Apache is INSANELY efficient at serving up millions of small files in parallel, literally the use case here. lftp, wget and curl can use HTTP (no encryption) to efficiently fetch the files. It can be crazy efficient: I have filled 2x10GB pipes with this, so be careful! This assumes you aren't using the internet but have dedicated links.

The other option is to compile a version of SSH/SSHD with -no-encryption enabled. The exact flag may be different than I remember. PUT THE BINARIES SOMEWHERE SPECIAL, not in the normal bin spaces. Make them root-only executable, run the server on a non-standard port, and use the job to start and stop the server piece so it is not up and running all the time. You can then call ssh/scp with a "nocrypt" option when you set the encryption scheme. Use it with existing commands or use the parallel find/xargs example I put elsewhere. While encryption is generally much faster these days, it is still a significant load and can force single-threaded comms, slowing you down.

I'm truly grateful for all the valuable input and guidance from this community. Your expertise and support have been instrumental in shaping my decision.
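For the Apache option, a parallel fetch could be sketched roughly like this. It is only a sketch under assumptions: `BASE_URL`, `JOBS`, and the two function names are made up for illustration, the source directory is assumed to be served as-is over plain HTTP, and file names are assumed to need no URL escaping. `xargs -P` is a GNU/BSD extension rather than POSIX. Note that a plain HTTP fetch copies file contents only, so hard links would not be preserved this way.

```shell
#!/bin/sh
# Hypothetical values, not from the thread: adjust to your own setup.
BASE_URL="${BASE_URL:-http://backup-src.example:8080}"  # assumed Apache address on the source
JOBS="${JOBS:-16}"                                       # number of parallel fetches

# On the source: print every regular file, relative to the served directory.
make_file_list() {
    # $1 = directory that Apache serves
    (cd "$1" && find . -type f | sed 's|^\./||' | sort)
}

# On the destination: fetch each listed path, JOBS transfers at a time.
# curl -f fails on HTTP errors, --create-dirs recreates the directory tree.
fetch_list() {
    # $1 = file list produced by make_file_list, $2 = destination directory
    xargs -P "$JOBS" -I{} curl -sf --create-dirs -o "$2/{}" "$BASE_URL/{}" < "$1"
}
```

Something like `make_file_list /backup > files.txt` on the source and `fetch_list files.txt /restore` on the destination would be the quick and dirty way to compare timings against rsync.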

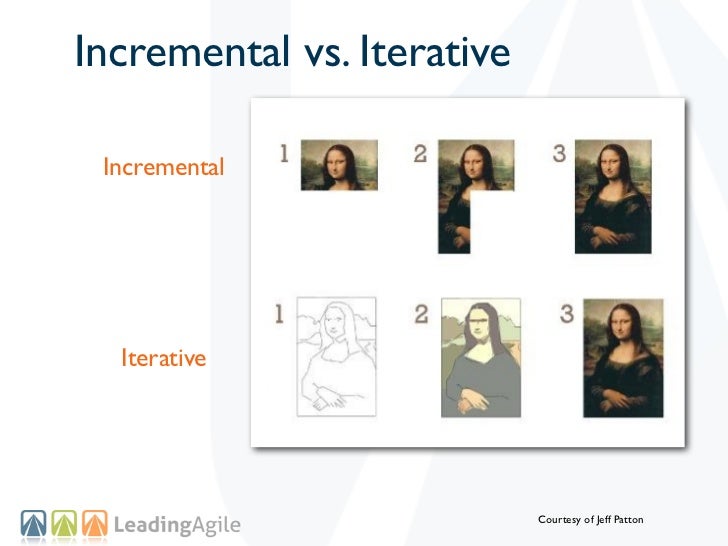

#Arrsync incremental vs whole file reddit update#

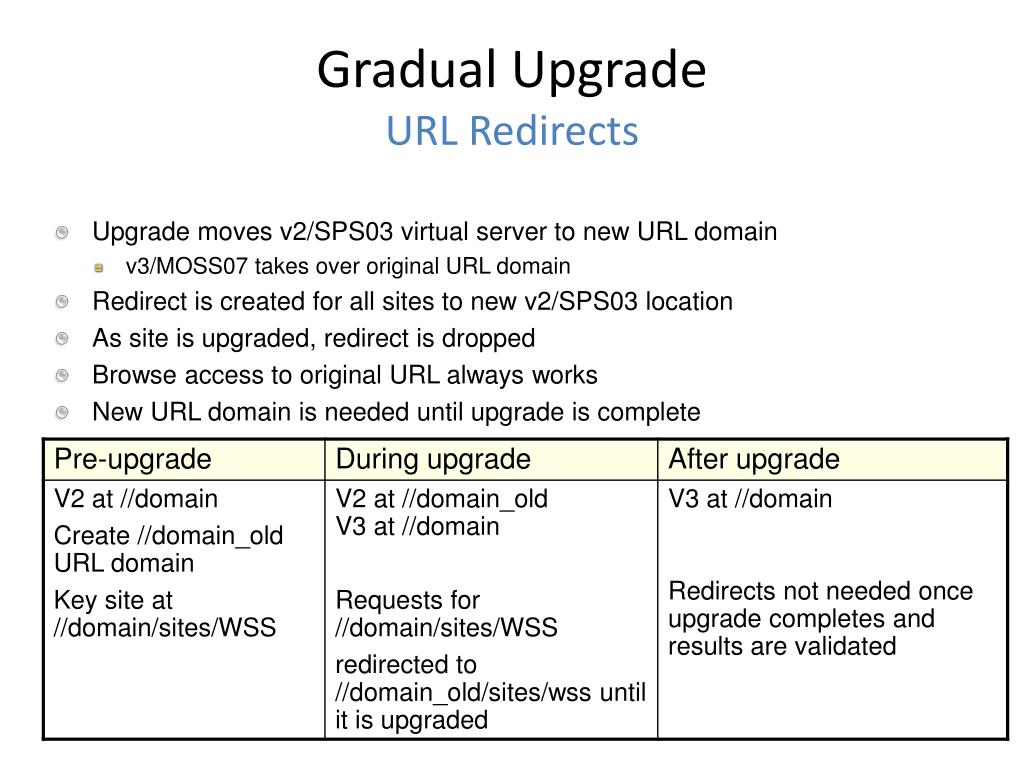

Hi everyone, I have an incremental backup of 1500GB, consisting of around 17 million files, that needs to be transferred from one location to another daily. Typically I rely on rsync for file transfers, but in this case it's spending a considerable amount of time on calculations, so I'm curious whether there are better tools for this massive task. Do you have any suggestions for alternative tools or techniques that can handle this transfer efficiently? I would greatly appreciate your insights!

The source has a daily incremental backup of the last 10 days which uses hardlinks. I want to transfer this backup to an offsite server preserving hardlinks. Currently, I'm running the following command:

`rsync -azHe 'ssh -p 50900' --delete-after --progress /backup`

After carefully considering all the insightful comments and suggestions, it seems like ZFS replication is the way to go. I'll be working on implementing it this week and will provide an update soon.
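For anyone curious what ZFS replication might look like here, below is a minimal sketch, assuming both sides run ZFS and already share one common snapshot. The dataset name, remote host, and function names are placeholders and not from the post; only the SSH port is taken from the rsync command. An incremental `zfs send` ships the changed blocks between two snapshots instead of walking 17 million files, and hard links survive because the filesystem itself is replicated.

```shell
#!/bin/sh
# Placeholder names, not from the original post.
DATASET="${DATASET:-tank/backup}"
REMOTE="${REMOTE:-backup@offsite.example}"
PORT="${PORT:-50900}"   # SSH port used in the rsync command above

# Print the snapshot + incremental send pipeline for two snapshot names.
replication_cmd() {
    # $1 = previous snapshot present on both sides, $2 = new snapshot to create
    printf 'zfs snapshot %s@%s && zfs send -i %s@%s %s@%s | ssh -p %s %s zfs receive -F %s\n' \
        "$DATASET" "$2" "$DATASET" "$1" "$DATASET" "$2" "$PORT" "$REMOTE" "$DATASET"
}

# Dry run by default: only prints the command. Set RUN=1 to execute it.
replicate() {
    cmd=$(replication_cmd "$1" "$2")
    if [ "${RUN:-0}" = 1 ]; then
        sh -c "$cmd"
    else
        echo "$cmd"
    fi
}
```

Calling `replicate day1 day2` prints the pipeline so it can be checked before running it for real. `zfs receive -F` rolls the destination back to its latest snapshot first, so anything changed on the offsite copy since then would be discarded.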
